Reference News Network reported on December 17 that, according to US media, weapons that can kill without direct human involvement, so-called "lethal autonomous weapons", will soon appear.

According to a December 15 report on the website of the US biweekly Forbes, a small number of drones attacked the Khurais oil field and the Abqaiq refinery in Saudi Arabia on September 14, 2019. Besides disrupting 5% of the world’s crude oil production, the attack highlighted some of the challenges posed by these remotely controlled weapons.

The report said the first challenge is accountability. Nearly three months after the incident, it is still unclear who carried out the attack. Like hackers in cyber warfare, the attackers left almost no trace. Another problem is that such weapons are small and relatively cheap, which may make them attractive to small groups of militants or terrorists as well as to some countries.

The report said this is frightening enough. Given the current direction of the arms industry, however, these concerns pale in comparison with those that may arise in the future.

As the Dutch NGO PAX pointed out in a report published a few weeks ago, the world is on a dangerous path: weapons that can kill without direct human involvement, so-called "lethal autonomous weapons", will soon appear. They are more commonly known as "killer robots".

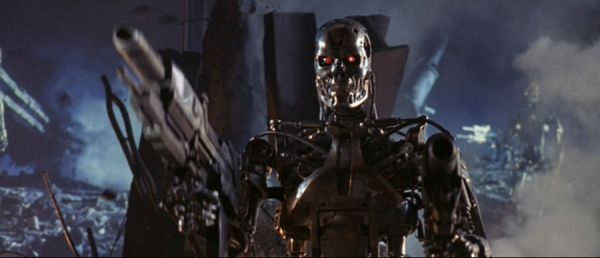

According to the report, the nickname is somewhat misleading, evoking fantasy creations such as the Terminator of science fiction films. In fact, these weapons resemble more traditional lethal equipment: unmanned combat aircraft, tanks and submarines. What has changed is not the shape of the weapon but its degree of autonomy. Fully autonomous offensive weapons have not yet been deployed in actual combat, but the machines are becoming ever more advanced and less dependent on human control.

File photo: The T-800 hunter robot in the science fiction film Terminator. (Forbes biweekly website)

File photo: Turkey’s "Kargu" attack quadcopter drone on display at an exhibition. (Turkish Ministry of Defence website)

One example is the "Kargu" autonomous attack quadcopter developed by the Turkish state-owned company STM. According to reports, it will be deployed in 2020 in the area of eastern Turkey bordering Syria. According to an article in the Turkish newspaper Hürriyet, each "Kargu" fleet will consist of 30 drones, all equipped with artificial intelligence and facial recognition systems. Given the coordinates or images of a target, the Kargu drones will be able to carry out the entire mission autonomously. Fortunately, human operators can still choose to "abort the mission" or "return to base" at any stage of the attack.

According to the report, Russia’s T-14 Armata main battle tank has an unmanned turret and computerized systems, so it is often cited as another piece of equipment that will soon become fully autonomous, although some doubt whether this would really make it more effective on the battlefield.

Perhaps not coincidentally, countries such as Russia, Israel and the United States strongly oppose a preemptive ban on the development of "killer robots". The United Nations discussed a preemptive ban on such weapons at a meeting in Geneva in November.

As The New York Times pointed out in a recent special report, the preemptive ban on blinding laser weapons was successful. In theory, therefore, a similar ban could also apply to "killer robots". Broad public awareness of the issue and strong support for a ban could change the situation, and that is what is missing at present.

[Extended reading] Lifting the ban on "killer robots"? US military AI development strategy raises ethical disputes.

Reference News Network reported on February 15 that, according to a February 14 report on the website of Britain’s Daily Mail, the US military hopes to expand the use of artificial intelligence (AI) in warfare but says it will take care to deploy the technology in accordance with national values.

According to the report, the Pentagon set out in a 17-page document how to bring artificial intelligence into future military operations and how to keep pace with the technological progress of Russia and China.

The document, entitled "Harnessing AI to Advance Our Security and Prosperity", marks the first time the Pentagon has set out a strategy for dealing with the rise of artificial intelligence.

The strategy calls for accelerating the use of artificial intelligence systems across the military for a range of tasks, from intelligence gathering to predicting maintenance problems in fighter aircraft or ships. It urges the United States to advance such technologies rapidly before other countries erode its advantage.

"Other countries, especially China and Russia, are also investing heavily in artificial intelligence for military purposes, including those applications that have been questioned by the outside world in terms of international norms and human rights," the report said.

The document makes little mention of autonomous weapons but cites a 2012 military directive requiring that humans remain in control. A few countries, including the United States, have been blocking efforts at the United Nations to establish an international ban on "killer robots", fully autonomous weapon systems that may one day wage war without human intervention.

The United States believes it is too early to try to regulate "killer robots".

According to the news report, the strategy released by the Pentagon this week focuses on more immediate applications, but even some of these have triggered ethical debates.

The Pentagon ran into obstacles in the field of artificial intelligence last year, when internal protests at Google led the technology company to withdraw from Project Maven, which uses algorithms to analyze aerial imagery of conflict zones.

Other companies are trying to fill the gap, and the Pentagon is working with artificial intelligence experts in industry and academia to draw up ethical guidelines for its artificial intelligence applications.

Todd Probert, vice president of Raytheon’s artificial intelligence department, said: "Everything we see is decided by human decision makers."

Probert said: "The project is using technology to help speed up this process, but it has not replaced the existing command structure."

(2019-02-15 10:29:27)

[Extended reading] Expert warning: Killer robots may set off an arms race or lead to the massacre of civilians.

Reference News Network reported on November 21 that the website of Britain’s Daily Star published a report entitled "The Killer Robot Arms Race" on November 17.

An artificial intelligence expert has revealed that the United States, Britain, Russia, Israel and other countries are competing to build killer robot armies.

From self-firing guns and drones to remotely controlled super tanks, prototype robots in the United States and Russia have been built and are ready to go. Humanoid robots will not appear on the battlefield for a few more decades, but they outline a terrible prospect for the world.

Noel Sharkey, emeritus professor of artificial intelligence and robotics at the University of Sheffield in the UK, told SciDev.Net: "An arms race has begun."

Sharkey said: "What we are seeing now is that the killer robot arms race among the United States, Israel and Russia has begun. If these things appear, they could accidentally trigger a war at any time."

He also said: "How can we ban them? You can put these things on hold forever, but they only need to be recharged to start again."

Artificial intelligence robots are now causing a sensation around the world. Professor Sharkey worries that the research and development of killer robots may advance at the same astonishing speed, so he is leading a global campaign to ban them.

After this website disclosed the latest development, an American robot doing backflips, the expert warned that Russia’s latest project was "absolutely frightening".

Sharkey said: "There is something terrible … The Russians have developed this thing called a ‘super tank’. It is the most advanced tank in the world, far ahead of the rest. It is definitely a monster with great destructive power, and they can control it remotely. They are also trying to turn it into a fully autonomous robot as fast as they can."

Although autonomous armed robots are many years ahead of humanoid robots, Professor Sharkey worries that humanoid robots, once possible, could bring disaster to the world and lead to the massacre of civilians.

Warning of the terrible consequences of killer robots, Professor Sharkey said: "This cannot comply with the laws of war. Can they tell whether a target is military or civilian? That is the key to the laws of war."

He said: "Killing people who are trying to surrender is also not allowed. Many people think surrender means raising a white flag, but in fact surrender means any gesture showing that you are no longer fighting, such as lying on the ground unarmed … but robots cannot recognize this. My biggest personal concern is the damage this will do to global security, because an arms race in such weapons has already begun."

File photo: An American robot that can do backflips. (British Daily Star website)

(2017-11-21 10:14:24)

[Extended reading] German media: UN efforts to restrain the development of "killer robots" have so far produced no results.

Reference News Network reported on November 16 that, according to a November 13 report on the website of Germany’s Der Tagesspiegel, fully autonomous killer robots will in the near future decide matters of life and death on the battlefield. Discussions on banning or at least restricting such weapon systems have continued in recent years but have so far produced no result. The United Nations recently began discussing the issue in Geneva.

What is a killer robot?

Its official name is "lethal autonomous weapon system". There is currently no universally accepted definition. The United Nations and the International Red Cross describe it as a system that can select targets, launch attacks and eliminate targets without human help. In other words, once programmed, these weapon systems can independently analyze data, navigate to the combat area and use weapons such as cannons or rockets. The great progress made in artificial intelligence in recent years forms the basis for the development of killer robots.

Drones already in use are still controlled by soldiers, so they are not killer robots, although independent operation does not seem far off.

How far has development progressed?

Russian Deputy Prime Minister Rogozin, who is in charge of armaments, released a video this spring showing a humanoid killer robot resembling the Terminator. This was more of a propaganda exercise; whether the machine looks human is beside the point. Experts from Human Rights Watch believe that "the autonomy of weapon systems developed by the United States, Britain, China, Israel, Russia and South Korea is increasing". Prototypes are already in use.

Supporters argue that such weapons will minimize the danger to soldiers. But this could lower the threshold for war and lead to more wars, and thus to more civilian casualties. This trend can already be seen in the use of remotely controlled drones.

The picture shows a military drone in service with the United States.

Who will bear the consequences?

This is a fundamental ethical issue inseparable from killer robots. Ethical and moral action, the distinction between good and evil and between right and wrong, the weighing of consequences and responsibility for one’s own actions would all be absent from future wars. Although a robot has artificial intelligence, it has no feelings such as pity or regret and no sense of guilt, and it cannot make sense of concepts such as moderation. Yet this is what the so-called law of war prescribes: the parties to a conflict must avoid unnecessary damage and unnecessary suffering, and must treat civilians and wounded, incapacitated and captured soldiers humanely. Experts believe this cannot be programmed. In 2013, policy experts warned in a report to the United Nations Commission on Human Rights that these "tireless war machines" could turn armed conflicts into endless wars, because diplomacy would be unable to stop machines built for fighting from killing on even when the situation is hopeless.

Are there legal norms?

So far, there are none. The current UN conference will not adopt rules, but will only sound out the political, ethical and legal scope in a "political statement". A ban is out of the question.

Because these systems do not yet actually exist, Germany and France propose first defining autonomous weapon systems. A group of technical experts, including military representatives, is to explain the risks of the new weapons. Representatives of the international Campaign to Stop Killer Robots consider this insufficient and say these weapons must be banned preemptively.

Only 19 countries have called for a ban. Staying in the background are precisely the countries that lead in integrating artificial intelligence into combat equipment. More than 100 experts recently wrote in an open letter that killer robots are the third revolution in warfare, after gunpowder and nuclear bombs. If the new weapons fall into the hands of tyrants and terrorists, what awaits people will be sheer terror.

(2017-11-16 09:31:00)
